Performance Tuning

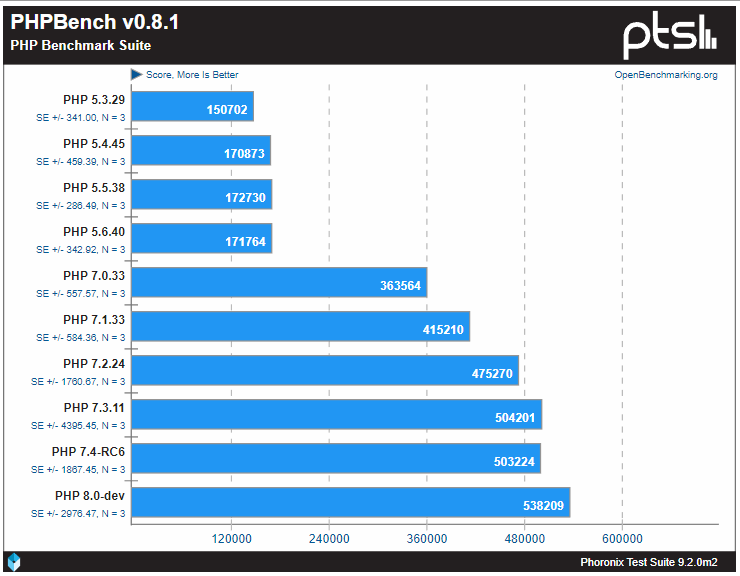

PHP 7

PHP 7 provides numerous performance benefits over PHP 5.x and in many cases can be over twice as fast. We recommend that all users migrate to PHP 7.

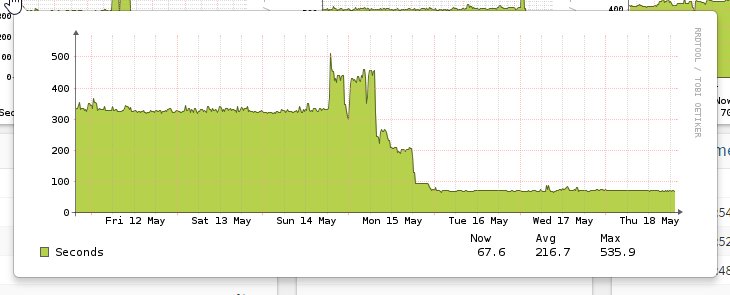

For large installations, switching to PHP 7 will deliver a major speed boost in the UI. The image below gives an indication of the benefits.

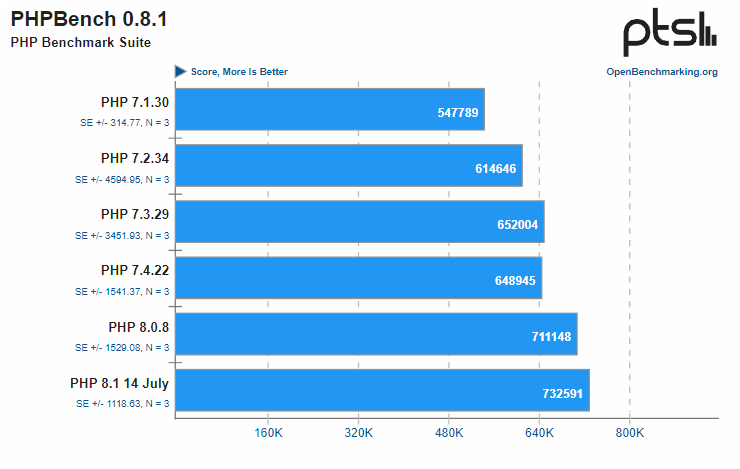

PHP 8

PHP 8 provides further performance improvements over PHP 7, but the language is still actively evolving and incompatibilities may be introduced as the 8.x line develops.

Opcode caching

An opcode cacher compiles PHP code to opcode, which means it won't have to be re-interpreted every time you load a page or run a script. It will automatically detect if the code has changed and re-compile.

Starting with PHP 5.5, the Zend Opcache is integrated and shipped with PHP, and PHP 7 also includes it by default. For the web user interface (Web UI) the in-memory Opcache is enabled by default, but for CLI scripts we need to enable the file-based Opcache. The file cache can also be used as a second-level cache in general and should increase performance if the PHP process gets restarted or runs out of memory.

On Ubuntu/Debian-based systems the Opcache configuration will be in /etc/php7.0/mods-available/opcache.ini, /etc/php/7.0/mods-available/opcache.ini or /etc/php5/mods-available/opcache.ini, and on RHEL-based systems it will be in /etc/php.d/opcache.ini:

; configuration for php opcache module

zend_extension=opcache.so

opcache.enable=1

opcache.enable_cli=1

opcache.file_cache=/tmp/php-opcache

We need to ensure that the file cache directory is created at boot and correctly managed by the system. On systemd systems you can create the file /etc/tmpfiles.d/php-cli-opcache.conf:

d /tmp/php-opcache 1777 root root 1d

Now create the temporary folder with:

systemd-tmpfiles --create /etc/tmpfiles.d/php-cli-opcache.conf

After running an Observium script or waiting for a cron job to run you should see files created in that directory:

root@zeta:~# tree /tmp/php-opcache/

/tmp/php-opcache/

└── c00813309ef797d6bb0a841f0126ed13

├── opt

│ └── observium

│ ├── alerter.php.bin
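If you do not have tree installed, the same check can be done by counting the cached files. The following is a minimal sketch: the function name count_opcache_files is hypothetical, and it assumes the cached files end in ".bin" as in the listing above.

```shell
# Hypothetical helper: count compiled script files under the file
# cache directory. Defaults to the opcache.file_cache path configured
# above; prints 0 if the directory does not exist.
count_opcache_files() {
    cache_dir=${1:-/tmp/php-opcache}
    # The cached files in the listing above end in ".bin".
    find "$cache_dir" -type f -name '*.bin' 2>/dev/null | wc -l | tr -d ' '
}
```

Run count_opcache_files before and after a poller run; a count that stays at 0 suggests the file cache is not being written.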

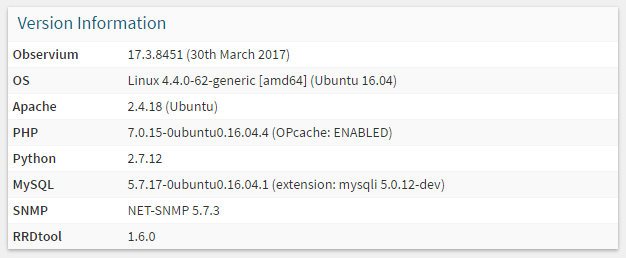

To check whether Opcache is being used by the Web UI, open the "About Observium" page from the right-hand "Cog" menu and look at the "Version Information" panel. You should see "OPcache: ENABLED" together with the PHP version.

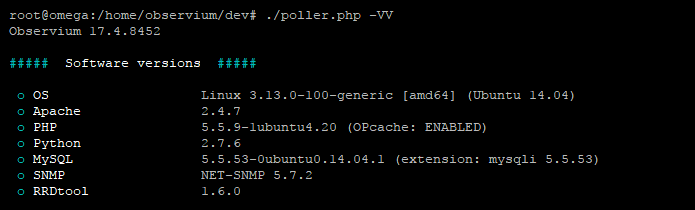

To check whether Opcache is being used by the CLI, run the poller or discovery script with -VV, e.g.:

./discovery.php -VV
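If the check above suggests that Opcache is not in use for the CLI, one thing to rule out is a missing or commented-out directive in the ini file. The sketch below only inspects the file text and does not ask PHP which settings are in effect. The function name check_opcache_ini is hypothetical, and the path you pass should be whichever of the locations listed earlier exists on your system.

```shell
# Hypothetical helper: report whether an ini file sets the three
# Opcache directives used in this guide. Lines commented out with ";"
# are not counted. Returns non-zero if any directive is missing.
check_opcache_ini() {
    ini_file=$1
    missing=0
    for key in opcache.enable opcache.enable_cli opcache.file_cache; do
        # Compare the text left of "=" (whitespace stripped) exactly
        # against the directive name.
        if awk -F= -v k="$key" \
            '{ gsub(/[ \t]/, "", $1); if ($1 == k) found = 1 }
             END { exit !found }' "$ini_file"; then
            echo "ok: $key"
        else
            echo "missing: $key"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, on a RHEL-based system: check_opcache_ini /etc/php.d/opcache.ini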

Fast userspace caching

Since version 17.2.8348, Observium includes an additional userspace cache mechanism that can be used to speed up page generation on very large installations. This caching ONLY works with PHP version 5.5 and up; please see the minimum requirements.

Userspace caching is only recommended to help scale very large installations, since it can delay data appearing in the Web UI while data is in the cache.

By default it detects the best available way to cache user data on a server from: Zend Memory Cache, APCu, Sqlite, Files.

Currently the only very fast in-memory caching driver you can use is the one provided by the PECL APCu extension. To use it, install the extension and reload your Apache server:

aptitude install php-apcu

Then enable the cache in config.php or in the Web UI configuration editor:

$config['cache']['enable'] = TRUE;

To manually select the cache driver, set it in config.php:

$config['cache']['driver'] = 'auto'; // Driver to use for caching (auto, zendshm, apcu, xcache, sqlite, files)

To see debug information about caching, add the string "cache_info" to the end of any URL.

Multiple poller instances

One poller instance can only poll a finite number of devices in 5 minutes. Running multiple pollers in parallel allows Observium to check more devices in the same amount of time. The install instructions set the poller to run two concurrent poller threads, which is only sufficient for small installs.

The number of pollers run by the poller-wrapper process can be configured in config.php or via the web-based configuration system. To configure the number of processes you need to ensure the number isn't hardcoded in the cron file:

*/5 * * * * root /opt/observium/observium-wrapper poller >> /dev/null 2>&1

Do note that increasing pollers will only increase performance until your MySQL database becomes the bottleneck, or more likely, when all the RRD writes to disk start to slow down the disk I/O.

If you try to run too many poller processes on storage without enough I/O, you'll simply cause disk thrashing and make the web interface slow. Ideally the entire poller-wrapper process should take as close to 300 seconds as possible to ensure the lowest average load.

Performance data for the poller can be seen on the "Polling Information" page at /pollerlog/ on your Observium installation.
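As a rough starting point for choosing the process count, the total polling work can be divided by the 300-second window. The sketch below assumes you have summed the per-device poll durations by hand from the polling statistics. The function name min_pollers is hypothetical, and the result is only a lower bound, since it ignores database and disk I/O limits.

```shell
# Hypothetical helper: lower bound on parallel poller processes.
# $1 = sum of per-device poll durations in seconds (an assumption:
#      you add these up yourself from the polling statistics)
# $2 = polling window in seconds, 300 by default
min_pollers() {
    total=$1
    window=${2:-300}
    # Integer ceiling of total / window.
    echo $(( (total + window - 1) / window ))
}

min_pollers 2000   # 2000 s of polling work in a 300 s window, prints 7
```

Adding processes cannot help a single device whose own poll time exceeds the window, so check the slowest devices as well as the total.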

Putting the RRDs on a RAM disk

See the separate Persistent RAM disk RRD storage page to find out how to set up a RAM disk with sync to the disks so you don't lose your data when your machine crashes/reboots.

Disable MySQL binary logging

Binary logging is enabled by default in MySQL 8.x and later.

Check whether binary logging is enabled on your MySQL connection:

/opt/observium/discovery.php -VV

##### DB info #####

o DB schema 484

o MySQL binlog ON

o MySQL mode NO_ENGINE_SUBSTITUTION
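The same state can also be read from the server itself through the log_bin variable. The following is a sketch that assumes the mysql command-line client is installed and can connect without further options (for example via credentials in ~/.my.cnf). The function name binlog_state is hypothetical.

```shell
# Hypothetical helper: extract the log_bin value from the
# tab-separated output of
#   mysql -N -B -e "SHOW VARIABLES LIKE 'log_bin'"
# read on standard input. Prints ON or OFF.
binlog_state() {
    # Match the variable name exactly so that related variables such
    # as log_bin_basename are ignored.
    awk -F '\t' '$1 == "log_bin" { print $2 }'
}

# Usage (not run here, since it needs a reachable MySQL server):
#   mysql -N -B -e "SHOW VARIABLES LIKE 'log_bin'" | binlog_state
```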

If MySQL binlog is in the ON state, follow these steps to disable it:

- Stop the MySQL server:

service mysql stop

- Edit your my.cnf, for example /etc/mysql/my.cnf, and set the option disable_log_bin in the [mysqld] section:

[mysqld]

disable_log_bin

For MariaDB or older MySQL versions, use the `skip-log-bin` option instead:

[mysqld]

skip-log-bin

- Start the MySQL server:

service mysql start

Separate disk for MySQL

When all the I/O for the RRDs is clobbering your disk, your MySQL database will likely become slow too, due to disk congestion. This slows down the web interface as well. It is advised to put the database on a separate disk (separate physical storage medium, not another LV on the same disk/RAID) for this reason.

Note that running your MySQL server on another machine increases the latency per query. When a lot of queries are done this could somewhat influence Observium's performance (however, moving MySQL to another machine than the one with all the RRD I/O could still prove to be a valuable enhancement).

Upgrade your uplink

Observium fires off a lot of SNMP queries to your devices. It has to wait for each reply to come back (per poller) before the poller can continue. If your uplink is congested, or latency to your devices is high, fewer devices can be polled in the same time frame. You could increase the number of parallel pollers (if congestion is not the issue) or upgrade your uplink so more can fit through the pipe to remedy this somewhat (until we run into "speed of light in a fiber" issues).

SNMP Maximum Repetitions

SNMP allows you to set the max-repetitions field in the GETBULK PDUs. This specifies the maximum number of iterations over the repeating variables in each operation, potentially vastly increasing GETBULK speed. Speed improvements are greater the higher the RTT between the Observium server and the polled device. The default used by the Net-SNMP commandline utilities is 10.

Compatibility with larger than default max-rep values depends upon the device's SNMP stack. Devices using a Net-SNMP-based stack or Cisco IOS will often support high max-rep values, but using even moderate values like 30 will result in problems with some platforms.

Using a max-rep value of 100 on a Linux host can yield roughly a three-fold reduction in the time taken to collect a ~1500 entry hrSWRunTable:

root@observium:/opt/observium# time snmpbulkwalk -v2c -Cr10 test.observium.org hrSWRunTable | wc -l

1577

real 0m22.206s

user 0m0.012s

sys 0m0.004s

root@observium:/opt/observium# time snmpbulkwalk -v2c -Cr100 test.observium.org hrSWRunTable | wc -l

1575

real 0m7.259s

user 0m0.012s

sys 0m0.000s
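The figures above can be checked with a little arithmetic. This is a sketch only: the function names are hypothetical, and the request estimate assumes each GETBULK response carries up to max-rep variable bindings, which is a simplification of what happens on the wire.

```shell
# Ratio of two wall-clock times, rounded to two decimals.
speedup() {
    awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.2f\n", slow / fast }'
}

# Rough count of GETBULK requests needed for a walk, assuming each
# response returns up to max-rep variable bindings (a simplification).
bulk_requests() {
    entries=$1
    max_rep=$2
    # Integer ceiling of entries / max_rep.
    echo $(( (entries + max_rep - 1) / max_rep ))
}

speedup 22.206 7.259      # "real" times from the two runs above, prints 3.06
bulk_requests 1577 10     # prints 158
bulk_requests 1575 100    # prints 16
```

Under this estimate the number of requests drops about ten-fold while the wall-clock time drops about three-fold, which suggests that round trips are not the only cost of a walk.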

Devices with large numbers of complex entities will usually benefit the most from max-rep, for example devices with large numbers of ports or load balancers with large numbers of configured virtual servers and service groups. Poller time has been observed to drop to 10-20% of its previous value on F5 devices.

Observium includes predefined max-rep values for many OSes, but their use is disabled by default for compatibility reasons. To enable max-rep by default, set the following in config.php:

$config['snmp']['max-rep'] = TRUE;

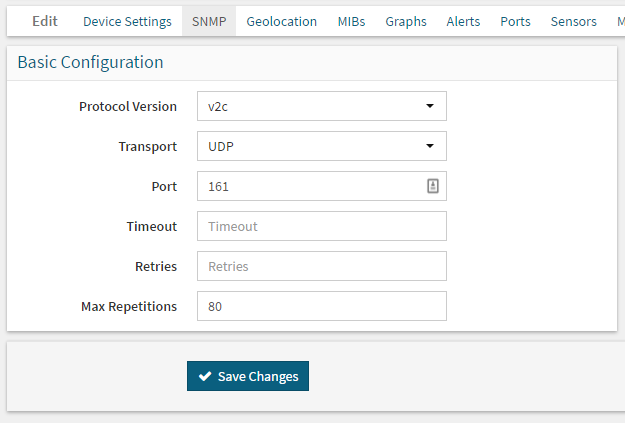

This can also be configured on a per-device basis where it's not desired to enable it globally but individual devices will benefit from it. The setting can be found in the SNMP section of the device settings page.

Housekeeping

It's highly recommended to run the housekeeping script frequently to reduce database clutter. Please see the Housekeeping page.